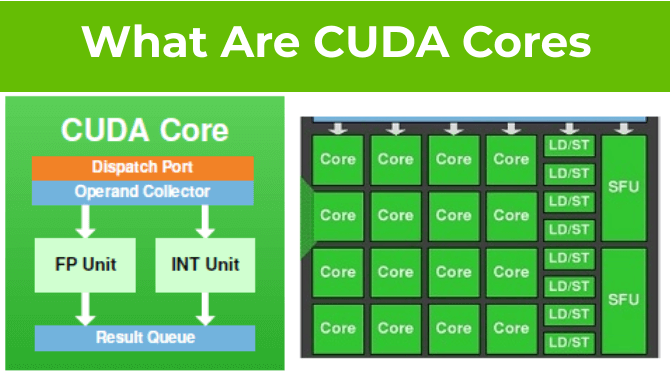

What are NVIDIA CUDA Cores?

NVIDIA CUDA cores are the fundamental processing units in NVIDIA GPUs, designed to perform general-purpose computations.

Some key points about CUDA cores:

- Architecture: CUDA cores are the building blocks of NVIDIA GPU processors. They are designed to perform a wide range of floating-point and integer operations in parallel.

- Parallelism: CUDA cores are organized into groups called Streaming Multiprocessors (SMs), which enable parallel processing of large-scale data workloads.

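- Performance: The number of CUDA cores in a GPU is a useful first indicator of its computational power, most reliably when comparing GPUs of the same architecture generation. More CUDA cores typically result in higher performance for tasks such as rendering, scientific computing, image/video processing, and machine learning inference, although clock speed and memory bandwidth also affect real-world results.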

- Programming: CUDA cores can be programmed using the CUDA programming model, allowing developers to leverage the parallel processing capabilities of NVIDIA GPUs for general-purpose computations.
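To make the link between core count and computational power concrete, here is a minimal Python sketch of the usual back-of-the-envelope estimate. It assumes every CUDA core completes one fused multiply-add (counted as two floating-point operations) per clock cycle. The function names and the GPU figures are hypothetical and are not the specifications of any real product.

```python
def total_cuda_cores(sm_count: int, cores_per_sm: int) -> int:
    """CUDA cores are grouped into SMs, so the total is a simple product."""
    return sm_count * cores_per_sm


def peak_fp32_tflops(cuda_cores: int, clock_ghz: float) -> float:
    """Theoretical peak in TFLOPS.

    Assumption: each core retires one fused multiply-add per clock,
    which is counted as 2 floating-point operations.
    """
    flops_per_second = cuda_cores * 2 * clock_ghz * 1e9
    return flops_per_second / 1e12


# Hypothetical GPU: 80 SMs with 128 cores each, running at 1.5 GHz.
cores = total_cuda_cores(sm_count=80, cores_per_sm=128)
print(cores)                                   # 10240
print(round(peak_fp32_tflops(cores, 1.5), 2))  # 30.72
```

This is a theoretical ceiling only. Measured throughput is normally lower because real workloads also wait on memory and cannot keep every core busy on every cycle.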

What are NVIDIA Tensor Cores?

NVIDIA Tensor cores are specialized processing units found in NVIDIA GPUs, designed to accelerate deep learning and machine learning tasks.

Key characteristics of Tensor cores:

- Architecture: Tensor cores are specifically optimized for performing matrix operations, which are a fundamental component of neural network computations.

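- Deep Learning Acceleration: Tensor cores perform small matrix multiply-accumulate (MMA) operations of the form D = A × B + C directly in hardware. In the first-generation (Volta) design, each Tensor core completes one 4×4×4 MMA per clock cycle. Larger matrix multiplications (GEMMs) are split into many of these tile operations, which provides significant performance boosts for deep learning training and inference.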

- Specialized Instructions: Tensor cores use specialized hardware and instructions to achieve higher throughput for matrix operations central to deep learning algorithms.

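- Performance: The number of Tensor cores in a GPU is a key indicator of its deep learning performance, together with the Tensor core generation and the numeric precisions it supports. Within one generation, more Tensor cores generally translate to faster deep learning training and inference.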

- Power Efficiency: Tensor cores are more power-efficient than general-purpose CUDA cores when handling deep learning workloads, making them better suited for data center and edge deployment scenarios.

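- Programming: Tensor cores can be programmed through the CUDA programming model and NVIDIA libraries, or indirectly through deep learning frameworks like TensorFlow and PyTorch. The frameworks can route matrix operations to Tensor cores automatically, typically when the computation uses a supported reduced-precision data type such as FP16 or BF16 (for example under mixed-precision training).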
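The matrix multiply-accumulate that Tensor cores are built around can be written out in plain Python. The sketch below only illustrates the arithmetic, D = A × B + C on 4×4 matrices. It runs on the CPU and does not use a Tensor core, and the name `mma_4x4` is made up for this example.

```python
def mma_4x4(a, b, c):
    """Return D = A x B + C for 4x4 matrices given as lists of rows.

    The three nested loops perform 4 * 4 * 4 = 64 multiply-adds,
    the work a Tensor core handles as one hardware operation.
    """
    n = 4
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = c[i][j]                 # start from the accumulator C
            for k in range(n):
                acc += a[i][k] * b[k][j]  # one multiply-add
            d[i][j] = acc
    return d


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
a = [[float(4 * i + j) for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]

# A x I + 1 simply adds 1 to every element of A.
d = mma_4x4(a, identity, ones)
print(d[0])  # [1.0, 2.0, 3.0, 4.0]
```

On CUDA cores, each of those 64 multiply-adds would be issued as a separate scalar operation, which is where the throughput advantage for matrix-heavy workloads comes from.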

CUDA Cores vs Tensor Cores: What’s the Difference?

- CUDA cores are general-purpose processing units that supply the basic computational power for GPU tasks, while Tensor cores are specialized units designed to accelerate the matrix operations crucial for deep learning and machine learning workloads.

- CUDA cores handle a broad range of floating-point and integer operations, one scalar operation per core at a time, while Tensor cores execute a whole small matrix multiply-accumulate as a single unit of work.

- The number of CUDA cores in a GPU indicates its overall computational power, while the number of Tensor cores reflects its deep learning performance.

- Modern NVIDIA GPUs like the H100 contain both CUDA cores and Tensor cores to provide a balance between general-purpose and deep learning-specific acceleration.
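One rough way to see the difference is to count how many hardware operations a matrix multiplication needs under each model. The Python sketch below compares scalar multiply-adds with 4×4×4 tile operations. The function names are hypothetical and the 4×4×4 tile size is an assumption taken from the first-generation Tensor core design. The comparison counts operations only and says nothing about clock rates or memory traffic.

```python
import math


def scalar_fma_count(m: int, n: int, k: int) -> int:
    """Multiply-adds needed for an (m x k) by (k x n) matrix product."""
    return m * n * k


def tile_mma_count(m: int, n: int, k: int, tile: int = 4) -> int:
    """Tile operations needed if each one covers a tile x tile x tile block.

    Assumption: dimensions are padded up to a multiple of the tile size.
    """
    return math.ceil(m / tile) * math.ceil(n / tile) * math.ceil(k / tile)


m = n = k = 1024
scalar_ops = scalar_fma_count(m, n, k)
tile_ops = tile_mma_count(m, n, k)
print(scalar_ops)              # 1073741824
print(tile_ops)                # 16777216
print(scalar_ops // tile_ops)  # 64
```

The 64× reduction in issued operations is the structural reason a Tensor core outpaces scalar cores on matrix work. The speedup actually observed depends on data types, matrix shapes, and memory bandwidth.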

How to Choose a GPU: More CUDA Cores or More Tensor Cores?

- General-Purpose Computing Performance

  - GPUs with more CUDA cores generally have higher performance for general-purpose parallel computing tasks like scientific simulations, image/video processing, and non-deep learning workloads.

  - The more CUDA cores, the greater the raw floating-point computational power the GPU can deliver for these types of workloads.

- Deep Learning Training and Inference Performance

  - GPUs with more Tensor cores generally have higher performance for deep learning training and inference tasks.

  - Tensor cores are specifically designed to accelerate matrix multiplication and other linear algebra operations that are central to neural network computations.

  - The more Tensor cores a GPU has, the faster it can perform deep learning computations, leading to higher throughput for training and inference.

- Power Efficiency

  - Tensor cores are more power-efficient than general-purpose CUDA cores for deep learning workloads.

  - GPUs with more Tensor cores often achieve higher deep learning performance per watt compared to GPUs relying more on CUDA cores.

  - This power efficiency can be important in data center and edge deployment scenarios where power consumption is a key concern.

- Workload Specialization

  - GPUs with more CUDA cores are better suited for a wider range of general-purpose parallel computing tasks beyond just deep learning.

  - GPUs with more Tensor cores are more specialized for deep learning and may not perform as well on non-deep learning workloads.
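The trade-off in the list above can be explored with a toy estimate. The Python sketch below assumes a workload can be split into a matrix-heavy part that runs at a GPU's Tensor core throughput and a remainder that runs at its general-purpose throughput. Both GPU profiles, all of their numbers, and every name in the code are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class GpuSpec:
    name: str
    general_tflops: float  # assumed throughput on CUDA cores
    tensor_tflops: float   # assumed throughput on Tensor cores
    watts: float           # assumed board power


def estimated_seconds(gpu: GpuSpec, total_tflop: float, matrix_fraction: float) -> float:
    """Time estimate: matrix work on Tensor cores, the rest on CUDA cores."""
    matrix_work = total_tflop * matrix_fraction
    other_work = total_tflop - matrix_work
    return matrix_work / gpu.tensor_tflops + other_work / gpu.general_tflops


def estimated_joules(gpu: GpuSpec, total_tflop: float, matrix_fraction: float) -> float:
    """Energy estimate, assuming the GPU draws its full power throughout."""
    return estimated_seconds(gpu, total_tflop, matrix_fraction) * gpu.watts


# Two made-up GPUs with the same power budget but different balances.
cuda_heavy = GpuSpec("cuda-heavy", general_tflops=40, tensor_tflops=160, watts=300)
tensor_heavy = GpuSpec("tensor-heavy", general_tflops=20, tensor_tflops=400, watts=300)

for fraction in (0.9, 0.2):
    for gpu in (cuda_heavy, tensor_heavy):
        secs = estimated_seconds(gpu, total_tflop=1000, matrix_fraction=fraction)
        print(fraction, gpu.name, round(secs, 3))
# 0.9 cuda-heavy 8.125
# 0.9 tensor-heavy 7.25
# 0.2 cuda-heavy 21.25
# 0.2 tensor-heavy 40.5
```

With these made-up numbers, the tensor-heavy profile wins when 90% of the work is matrix math and loses clearly when only 20% is. Real selection should rest on benchmarks of the actual workload rather than on a model this simple.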

In summary, the balance between CUDA cores and Tensor cores in a GPU determines its strengths—more CUDA cores favor general-purpose computing, while more Tensor cores favor deep learning performance and efficiency. Choosing the right GPU depends on the specific mix of workloads that need to be accelerated.